Radar radiation source recognition method based on compressed residual network


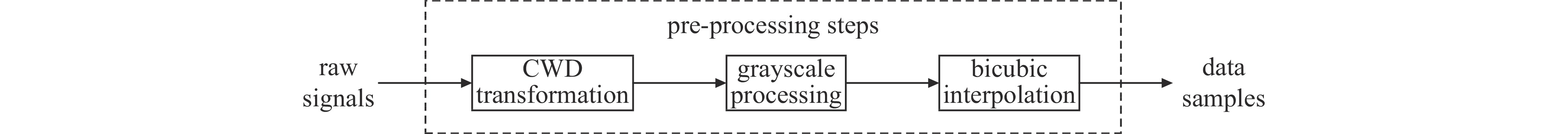

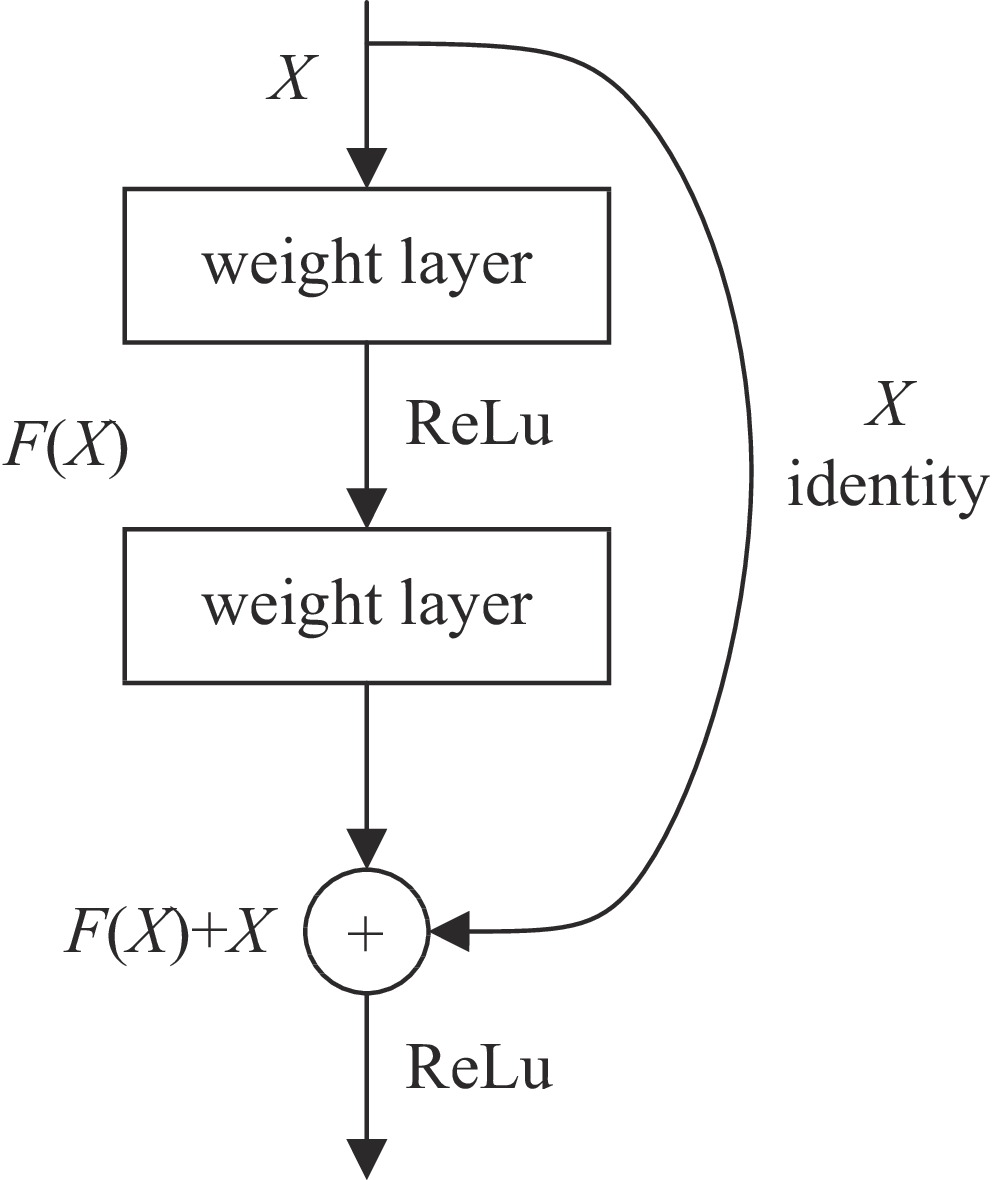

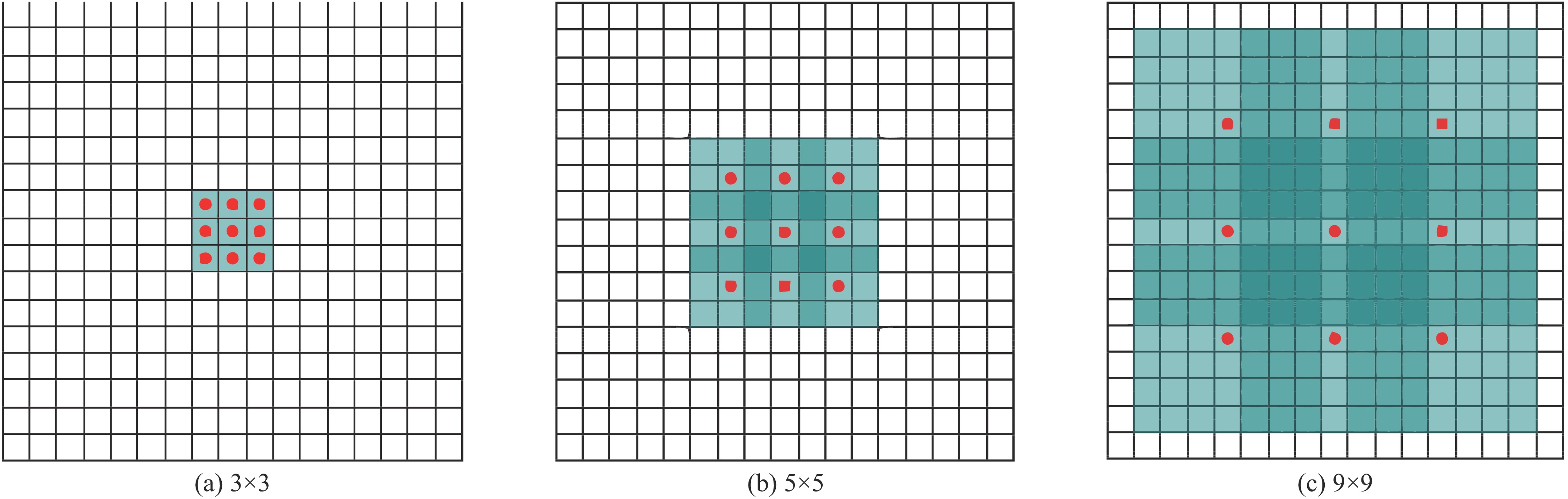

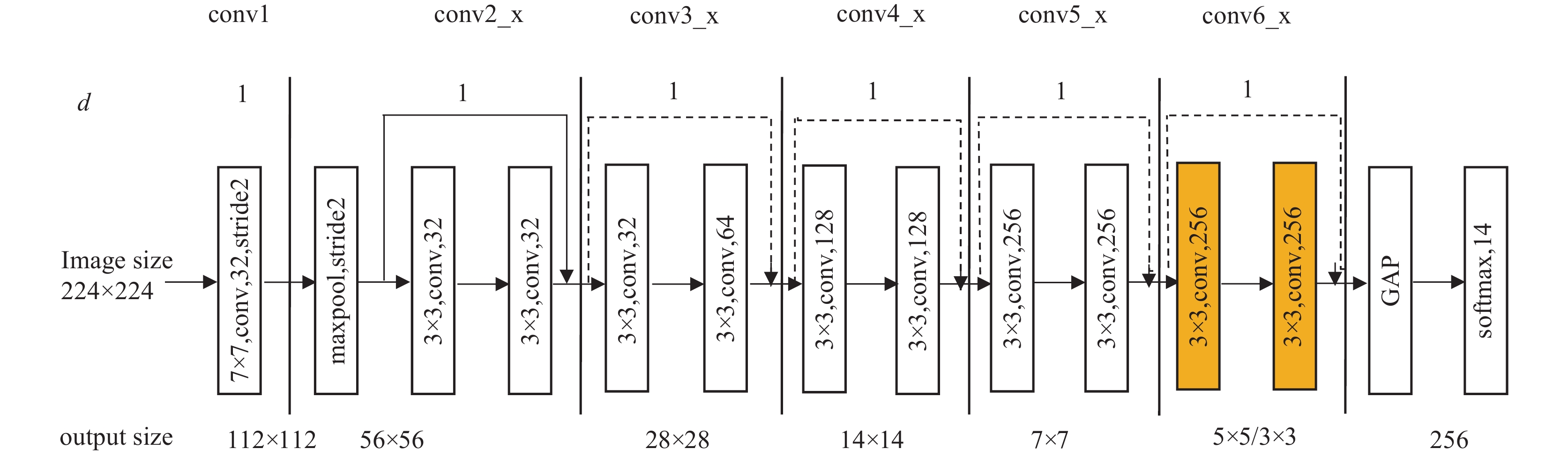

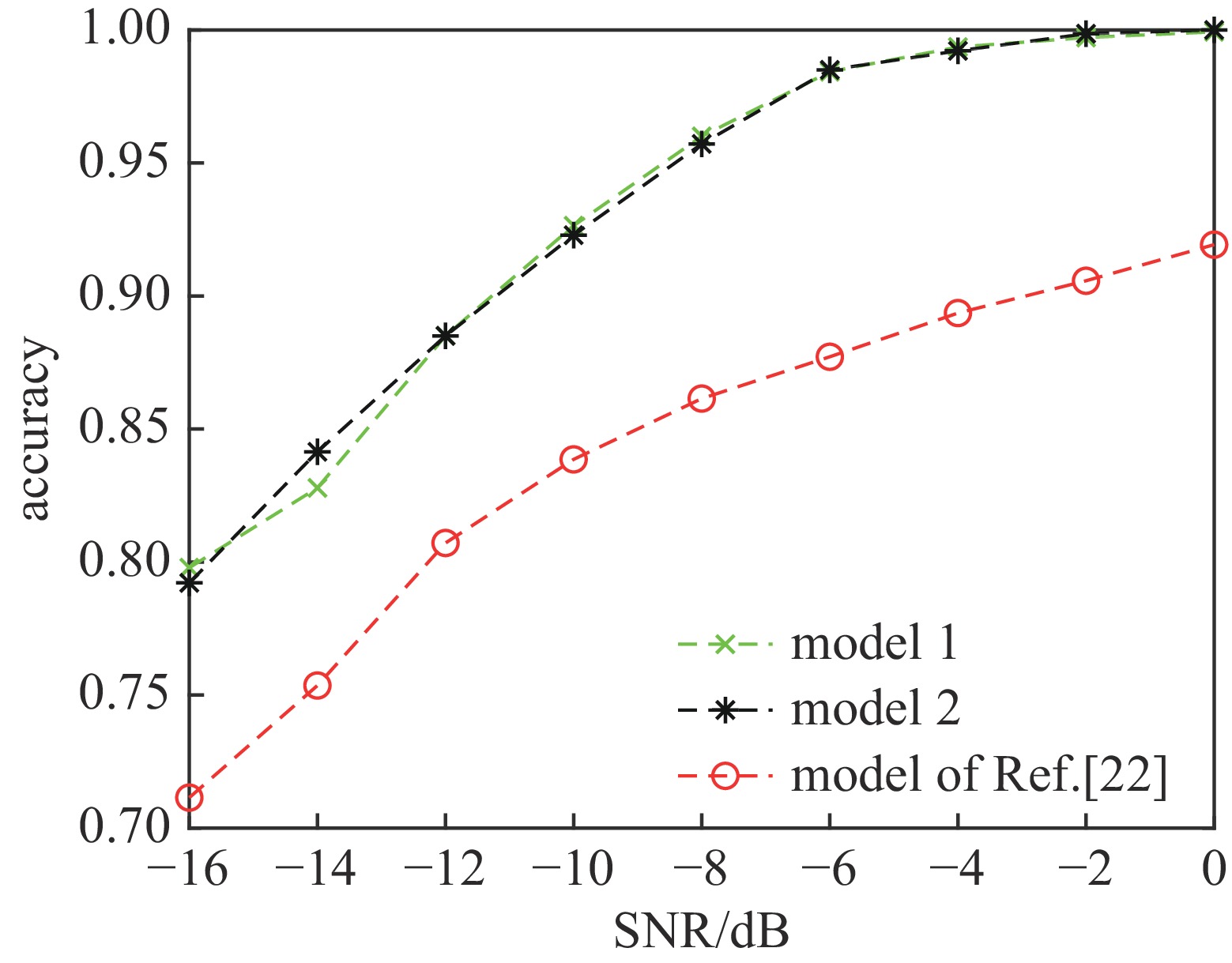

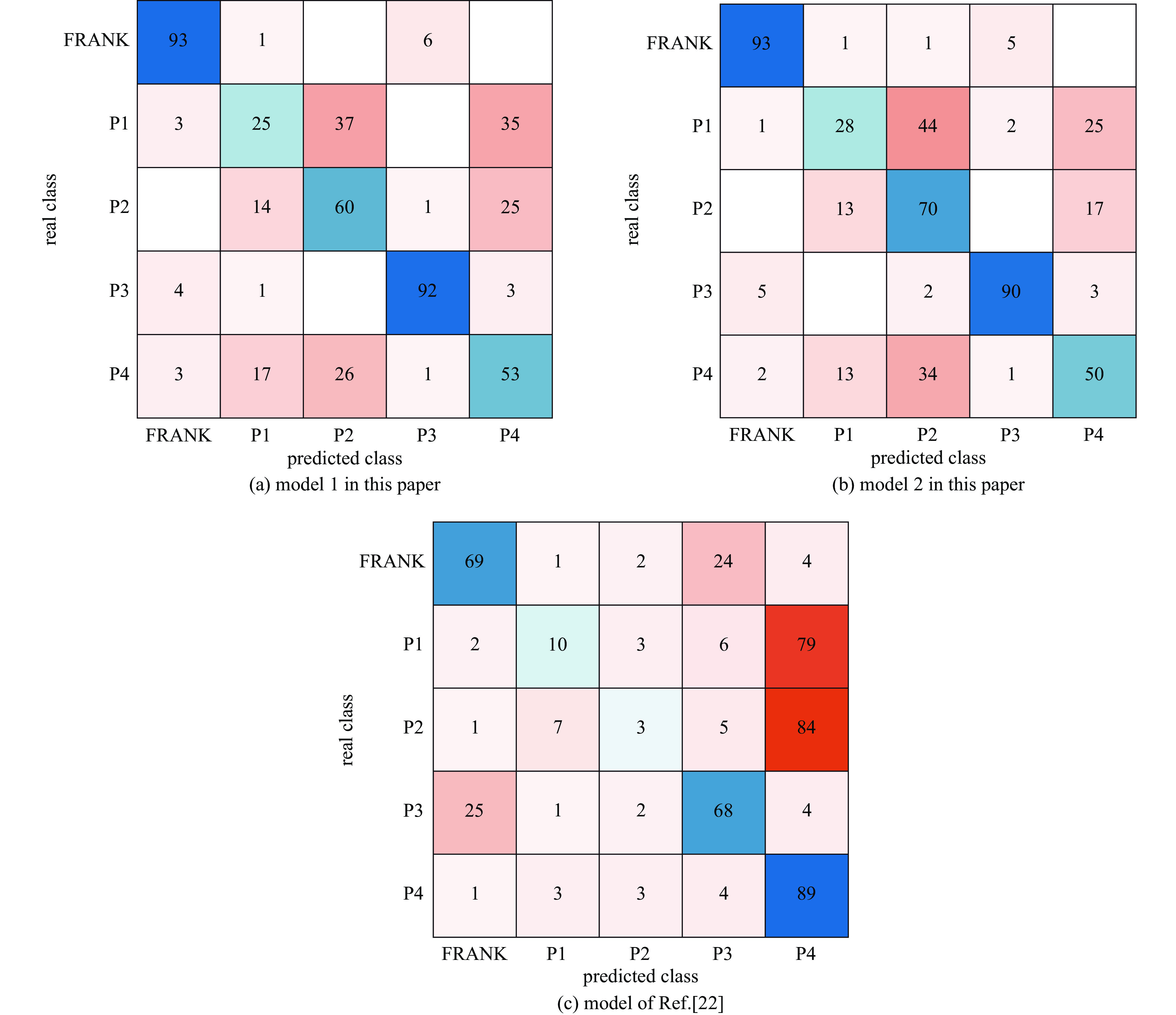

Abstract: Existing radar emitter signal recognition methods suffer from low recognition accuracy and poor timeliness at low signal-to-noise ratio (SNR). To address these problems, this paper proposes a radar emitter signal recognition method based on a compressed residual network. First, the time-domain signal is converted into a two-dimensional time-frequency image using the Choi-Williams distribution, which improves the extraction of the signal's essential features. Then, the "compression" range of the convolutional neural network (CNN) is selected according to the characteristics of the application scenario. Finally, a compressed residual network is built to extract image features automatically and perform classification. Simulation results show that, at a comparable model size, the proposed model reduces signal recognition running time by about 88% compared with the commonly used standard CNN and ResNet models, and raises the average recognition rate for 14 types of radar emitter signals by about 5% at an SNR of −14 dB. The method offers an efficient approach to intelligent radar emitter signal recognition and has potential for engineering application.
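The first step of the method turns each time-domain signal into a Choi-Williams distribution (CWD) image. The sketch below is a minimal pure-Python discretization for illustration only, not the authors' implementation. It assumes a complex (analytic) input, a kernel parameter `sigma` defaulting to 1.0, zero samples outside the observation window, and a Gaussian smoothing kernel over time whose width grows with the half-lag `tau`; the function name `choi_williams` and its parameters are hypothetical. Because the lag product spans 2·`tau` samples, frequency bin `k` corresponds to `k / (2 * n_freq)` cycles per sample.

```python
import cmath
import math


def choi_williams(x, sigma=1.0, n_freq=None):
    """Hypothetical discrete Choi-Williams distribution of a complex sequence x.

    Returns len(x) rows of n_freq real values. Bin k maps to normalized
    frequency k / (2 * n_freq) cycles/sample, because the lag product
    x[n + tau] * conj(x[n - tau]) spans 2 * tau samples.
    Assumption: sigma = 1.0 and zero padding outside the window.
    """
    n_samples = len(x)
    n_freq = n_freq or n_samples
    max_lag = n_freq // 2 - 1  # keep half-lags strictly below n_freq / 2

    def at(i):
        # samples outside the observation window are treated as zero
        return x[i] if 0 <= i < n_samples else 0j

    tfr = []
    for n in range(n_samples):
        r = [0j] * n_freq  # smoothed local autocorrelation, indexed by lag
        r[0] = abs(at(n)) ** 2
        for tau in range(1, max_lag + 1):
            acc, wsum = 0j, 0.0
            for mu in range(-max_lag, max_lag + 1):
                # Gaussian kernel in time whose width grows with the lag
                w = math.exp(-sigma * mu * mu / (4.0 * tau * tau))
                wsum += w
                acc += w * at(n + mu + tau) * at(n + mu - tau).conjugate()
            r[tau] = acc / wsum
            r[n_freq - tau] = r[tau].conjugate()  # Hermitian symmetry in lag
        # DFT over the lag axis; a Hermitian sequence has a real spectrum
        row = []
        for k in range(n_freq):
            s = sum(r[m] * cmath.exp(-2j * math.pi * k * m / n_freq)
                    for m in range(n_freq))
            row.append(s.real)
        tfr.append(row)
    return tfr
```

For a complex tone at 0.125 cycles/sample and `n_freq = 64`, the middle row peaks at bin 16 (2 × 0.125 × 64). The nested loops cost O(N·n_freq²) and only suit short sequences; a practical version would precompute the kernel weights and use an FFT over the lag axis.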


Table 1. Signal parameter settings

| Modulation type | Parameter | Range |
|---|---|---|
| CW | Carrier frequency $f_0$ | $[1/10, 1/4]\,f_s$ |
| LFM, NLFM | Carrier frequency $f_0$ | $[1/10, 1/4]\,f_s$ |
| | Bandwidth $B$ | $[1/10, 1/4]\,f_s$ |
| BPSK | Carrier frequency $f_0$ | $[1/10, 1/4]\,f_s$ |
| | Barker code length | 7 |
| QPSK | Carrier frequency $f_0$ | $[1/10, 1/4]\,f_s$ |
| | Phase code sequence length | 6 |
| FMCW | Carrier frequency $f_0$ | $[1/20, 1/5]\,f_s$ |
| | Bandwidth $B$ | $[1/20, 1/5]\,f_s$ |
| | Period $T$ | 1 μs |
| Frank, P1, P2, P3, P4 | Carrier frequency $f_0$ | $[1/10, 1/4]\,f_s$ |
| | Number of frequency steps $N$ | {6, 8} |
| BFSK | Carrier frequency $f_0$ | $[1/20, 1/4]\,f_s$ |
| | Barker code length | {11, 13} |
| QFSK | Carrier frequency $f_0$ | $[1/10, 1/4]\,f_s$ |
| | Frequency code sequence length | {6, 7} |
| Costas | Carrier frequency $f_0$ | $[1/10, 1/4]\,f_s$ |
| | Frequency code sequence length | {6, 7, 8} |

$f_s$ denotes the sampling frequency.
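Table 1 fixes only the parameter ranges. As a rough sketch of how test signals in those ranges might be synthesized, the pure-Python code below generates complex LFM, Barker-7 BPSK and Frank-coded waveforms and adds white Gaussian noise at a chosen SNR. The normalized-frequency convention (cycles per sample, i.e. $f_0/f_s$), the `samples_per_chip` parameter, the pulse lengths and every function name are assumptions for illustration; none of them is taken from the paper.

```python
import cmath
import math
import random

# Barker code of length 7 (Table 1, BPSK row)
BARKER_7 = [1, 1, 1, -1, -1, 1, -1]


def frank_phases(n_steps):
    """Frank code: N*N chips with phase 2*pi*i*j/N, i, j = 0..N-1."""
    return [2 * math.pi * i * j / n_steps
            for i in range(n_steps) for j in range(n_steps)]


def phase_coded(phases, f0, samples_per_chip):
    """Complex carrier at normalized frequency f0 (cycles/sample),
    holding each chip phase for samples_per_chip samples (assumed)."""
    out = []
    for c, phi in enumerate(phases):
        for s in range(samples_per_chip):
            n = c * samples_per_chip + s
            out.append(cmath.exp(1j * (2 * math.pi * f0 * n + phi)))
    return out


def bpsk_barker7(f0, samples_per_chip):
    """BPSK: code bit +1 -> phase 0, -1 -> phase pi."""
    return phase_coded([0.0 if b > 0 else math.pi for b in BARKER_7],
                       f0, samples_per_chip)


def lfm(f0, bandwidth, n_samples):
    """LFM: instantaneous frequency sweeps linearly from f0 to f0 + bandwidth."""
    rate = bandwidth / n_samples  # frequency increment per sample
    return [cmath.exp(2j * math.pi * (f0 * n + 0.5 * rate * n * n))
            for n in range(n_samples)]


def add_awgn(x, snr_db, rng):
    """Add complex white Gaussian noise so that signal/noise power = snr_db."""
    p_signal = sum(abs(v) ** 2 for v in x) / len(x)
    p_noise = p_signal / 10 ** (snr_db / 10)
    sd = math.sqrt(p_noise / 2)  # split the noise power over I and Q
    return [v + complex(rng.gauss(0.0, sd), rng.gauss(0.0, sd)) for v in x]


if __name__ == "__main__":
    rng = random.Random(0)
    f0 = rng.uniform(1 / 10, 1 / 4)  # carrier drawn from the Table 1 range
    n = rng.choice([6, 8])           # Frank step count from Table 1
    clean = phase_coded(frank_phases(n), f0, samples_per_chip=4)
    noisy = add_awgn(clean, snr_db=-14, rng=rng)
    print(len(clean), len(noisy))
```

Keeping the carrier as a complex exponential means the output can be passed straight to a time-frequency transform that expects an analytic signal; a real-valued pulse would need a Hilbert transform first.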

Table 2. Multiply-adds required by each convolution operation in different models

| Layer | Model 2 (this paper): computation | Model 2: multiply-adds | Ref. [22] model: computation | Ref. [22] model: multiply-adds |
|---|---|---|---|---|
| conv1 | 112×112×1×32×7×7 | 19,668,992 | 112×112×1×32×7×7 | 19,668,992 |
| conv2_x | 56×56×32×32×3×3×2 | 57,802,752 | 56×56×32×32×3×3×2 | 57,802,752 |
| conv3_x | 28×28×32×64×3×3 + 28×28×64×64×3×3 | 43,352,064 | 28×28×32×64×3×3 + 28×28×64×64×3×3 | 43,352,064 |
| conv4_x | 14×14×64×128×3×3 + 14×14×128×128×3×3 | 43,352,064 | 28×28×64×128×3×3 + 28×28×128×128×3×3 | 173,408,256 |
| conv5_x | 7×7×128×256×3×3 + 7×7×256×256×3×3 | 43,352,064 | 28×28×128×256×3×3 + 28×28×256×256×3×3 | 693,633,024 |
| conv6_x | 3×3×256×256×3×3×2 | 10,616,832 | 28×28×256×256×3×3×2 | 924,844,032 |
| fc | 256×14 | 3,584 | 256×14 | 3,584 |
| Sum | | 218,148,352 | | 1,912,712,704 |
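The entries in Table 2 follow the usual multiply-add count for a $k\times k$ convolution, $H_{\text{out}} W_{\text{out}} C_{\text{in}} C_{\text{out}} k^2$; the table lists only the main-path convolutions and the fully connected layer, so bias, batch normalization, activations and shortcut paths are not counted. The short script below recomputes both columns as a check; the helper name `conv_madds` is hypothetical. It makes visible that the entire gap comes from conv4_x to conv6_x, where the Ref. [22] model keeps a 28×28 feature map while model 2 continues to downsample.

```python
def conv_madds(h, w, c_in, c_out, k):
    """Multiply-adds of a k x k convolution with an h x w x c_out output map."""
    return h * w * c_in * c_out * k * k


# Layer shapes copied from Table 2 (model 2 of this paper)
model2 = {
    "conv1": conv_madds(112, 112, 1, 32, 7),
    "conv2_x": 2 * conv_madds(56, 56, 32, 32, 3),
    "conv3_x": conv_madds(28, 28, 32, 64, 3) + conv_madds(28, 28, 64, 64, 3),
    "conv4_x": conv_madds(14, 14, 64, 128, 3) + conv_madds(14, 14, 128, 128, 3),
    "conv5_x": conv_madds(7, 7, 128, 256, 3) + conv_madds(7, 7, 256, 256, 3),
    "conv6_x": 2 * conv_madds(3, 3, 256, 256, 3),
    "fc": 256 * 14,
}

# The Ref. [22] model keeps a 28 x 28 map from conv4_x onward
ref22 = dict(model2)
ref22.update({
    "conv4_x": conv_madds(28, 28, 64, 128, 3) + conv_madds(28, 28, 128, 128, 3),
    "conv5_x": conv_madds(28, 28, 128, 256, 3) + conv_madds(28, 28, 256, 256, 3),
    "conv6_x": 2 * conv_madds(28, 28, 256, 256, 3),
})

total_model2 = sum(model2.values())
total_ref22 = sum(ref22.values())
reduction = 1 - total_model2 / total_ref22
print(total_model2, total_ref22, f"{reduction:.1%}")
# 218148352 1912712704 88.6%
```

The resulting reduction in multiply-adds, about 88.6%, is close to the roughly 88% running-time reduction quoted in the abstract. That figure refers to measured running time against the standard CNN and ResNet baselines, which also depends on hardware and implementation, so this arithmetic is only a consistency check rather than a derivation of the reported result.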

[1] López-Risueño G, Grajal J, Sanz-Osorio A. Digital channelized receiver based on time-frequency analysis for signal interception[J]. IEEE Transactions on Aerospace and Electronic Systems, 2005, 41(3): 879-898. doi: 10.1109/TAES.2005.1541437

[2] Zhang Ming, Diao Ming, Gao Lipeng, et al. Neural networks for radar waveform recognition[J]. Symmetry, 2017, 9(5): 75. doi: 10.3390/sym9050075

[3] Kishore T R, Rao K D. Automatic intrapulse modulation classification of advanced LPI radar waveforms[J]. IEEE Transactions on Aerospace and Electronic Systems, 2017, 53(2): 901-914. doi: 10.1109/TAES.2017.2667142

[4] Zilberman E R, Pace P E. Autonomous time-frequency morphological feature extraction algorithm for LPI radar modulation classification[C]//2006 International Conference on Image Processing. 2006: 2321-2324.

[5] Guo Qiang, Nan Pulong, Zhang Xiaoyu, et al. Recognition of radar emitter signals based on SVD and AF main ridge slice[J]. Journal of Communications and Networks, 2015, 17(5): 491-498. doi: 10.1109/JCN.2015.000087

[6] Zhu Ming, Jin Weidong, Pu Yunwei, et al. Classification of radar emitter signals based on the feature of time-frequency atoms[C]//2007 International Conference on Wavelet Analysis and Pattern Recognition. 2007: 1232-1236.

[7] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition[DB/OL]. arXiv preprint arXiv: 1409.1556, 2015.

[8] Tompson J, Goroshin R, Jain A, et al. Efficient object localization using convolutional networks[C]//2015 IEEE Conference on Computer Vision and Pattern Recognition. 2015: 648-656.

[9] Sainath T N, Kingsbury B, Mohamed A R, et al. Improvements to deep convolutional neural networks for LVCSR[C]//2013 IEEE Workshop on Automatic Speech Recognition and Understanding. 2013: 315-320.

[10] LeCun Y, Bengio Y, Hinton G. Deep learning[J]. Nature, 2015, 521(7553): 436-444. doi: 10.1038/nature14539

[11] LeCun Y, Bottou L, Bengio Y, et al. Gradient-based learning applied to document recognition[J]. Proceedings of the IEEE, 1998, 86(11): 2278-2324. doi: 10.1109/5.726791

[12] Rumelhart D E, Hinton G E, Williams R J. Learning representations by back-propagating errors[J]. Nature, 1986, 323(6088): 533-536. doi: 10.1038/323533a0

[13] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks[C]//Proceedings of the 25th International Conference on Neural Information Processing Systems. 2012: 1097-1105.

[14] Szegedy C, Liu Wei, Jia Yangqing, et al. Going deeper with convolutions[C]//2015 IEEE Conference on Computer Vision and Pattern Recognition. 2015: 1-9.

[15] He Kaiming, Zhang Xiangyu, Ren Shaoqing, et al. Deep residual learning for image recognition[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition. 2016: 770-778.

[16] Zhang Ming, Diao Ming, Guo Limin. Convolutional neural networks for automatic cognitive radio waveform recognition[J]. IEEE Access, 2017, 5: 11074-11082. doi: 10.1109/ACCESS.2017.2716191

[17] Zhang Ming, Liu Lutao, Diao Ming. LPI radar waveform recognition based on time-frequency distribution[J]. Sensors, 2016, 16(10): 1682. doi: 10.3390/s16101682

[18] Kong S H, Kim M, Hoang L M, et al. Automatic LPI radar waveform recognition using CNN[J]. IEEE Access, 2018, 6: 4207-4219. doi: 10.1109/ACCESS.2017.2788942

[19] Peng Shengliang, Jiang Hanyu, Wang Huaxia, et al. Modulation classification based on signal constellation diagrams and deep learning[J]. IEEE Transactions on Neural Networks and Learning Systems, 2019, 30(3): 718-727. doi: 10.1109/TNNLS.2018.2850703

[20] Gao Jingpeng, Shen Liangxi, Gao Lipeng. Modulation recognition for radar emitter signals based on convolutional neural network and fusion features[J]. Transactions on Emerging Telecommunications Technologies, 2019, 30(12): e3612. doi: 10.1002/ett.3612

[21] Kumar Y, Sheoran M, Jajoo G, et al. Automatic modulation classification based on constellation density using deep learning[J]. IEEE Communications Letters, 2020, 24(6): 1275-1278. doi: 10.1109/LCOMM.2020.2980840

[22] Qin Xin, Huang Jie, Zha Xiong, et al. Radar emitter signal recognition based on dilated residual network[J]. Acta Electronica Sinica, 2020, 48(3): 456-462. doi: 10.3969/j.issn.0372-2112.2020.03.006 (in Chinese)

[23] Yu F, Koltun V, Funkhouser T. Dilated residual networks[C]//2017 IEEE Conference on Computer Vision and Pattern Recognition. 2017: 636-644.

[24] Lin Min, Chen Qiang, Yan Shuicheng. Network in network[DB/OL]. arXiv preprint arXiv: 1312.4400, 2014.

[25] Dos Santos C F G, Papa J P. Avoiding overfitting: A survey on regularization methods for convolutional neural networks[J]. ACM Computing Surveys, 2022, 54: 213.

[26] Wang Panqu, Chen Pengfei, Yuan Ye, et al. Understanding convolution for semantic segmentation[C]//2018 IEEE Winter Conference on Applications of Computer Vision (WACV). 2018: 1451-1460.

[27] Ioffe S, Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift[C]//Proceedings of the 32nd International Conference on Machine Learning. 2015: 448-456.

[28] Glorot X, Bordes A, Bengio Y. Deep sparse rectifier neural networks[C]//Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics. 2011: 315-323.

[29] Zhang Xiangyu, Zhou Xinyu, Lin Mengxiao, et al. ShuffleNet: An extremely efficient convolutional neural network for mobile devices[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2018: 6848-6856.

[30] Sandler M, Howard A, Zhu Menglong, et al. MobileNetV2: Inverted residuals and linear bottlenecks[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2018: 4510-4520.
