Siamese single-object tracking algorithm based on multiple attention mechanisms and response fusion

-

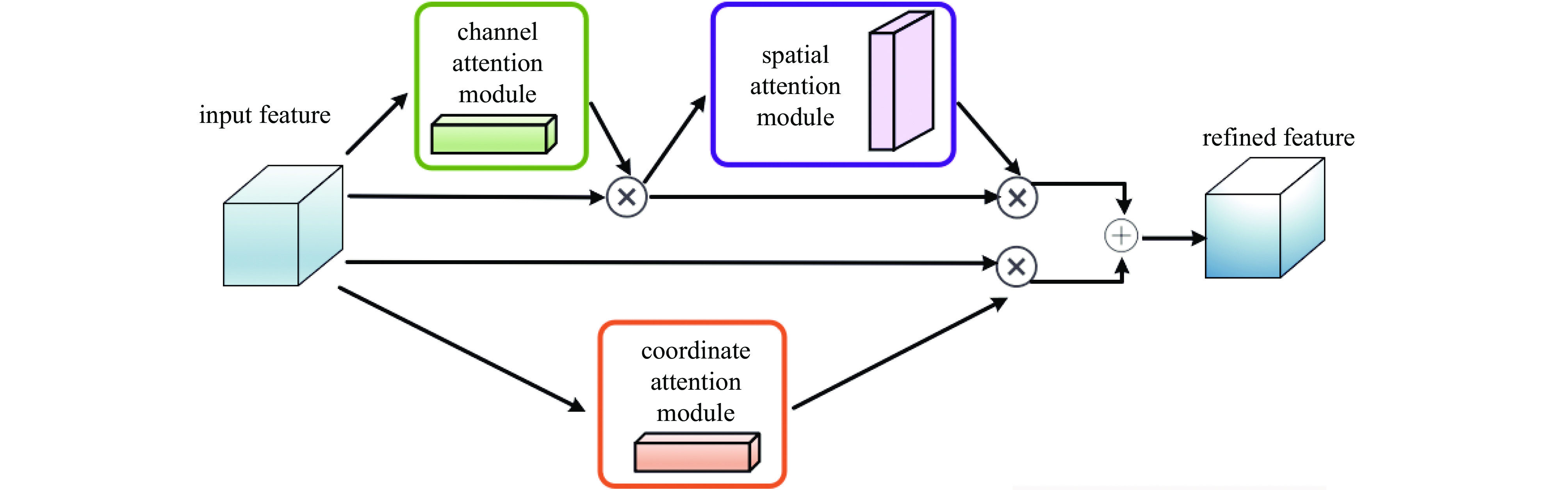

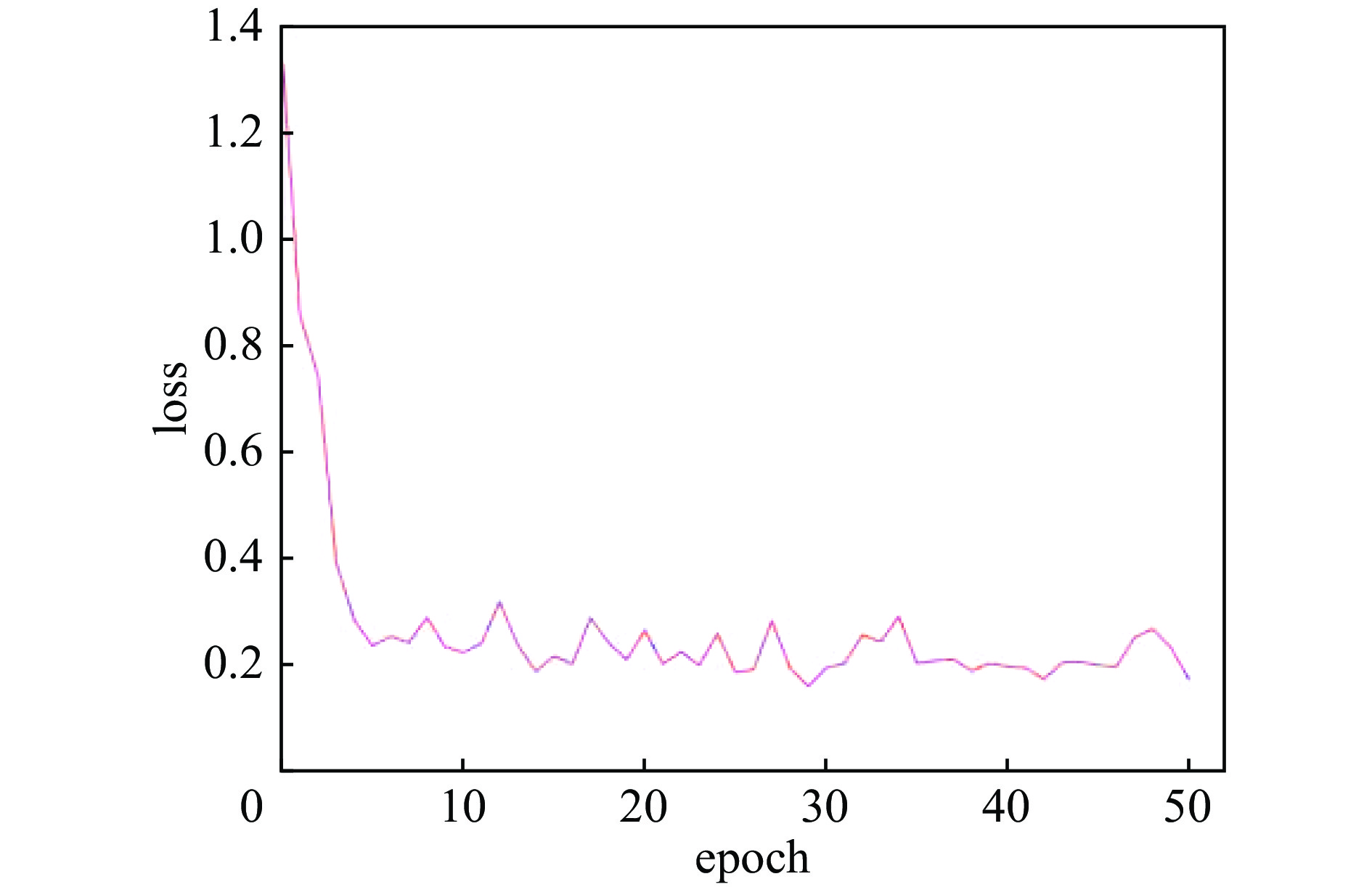

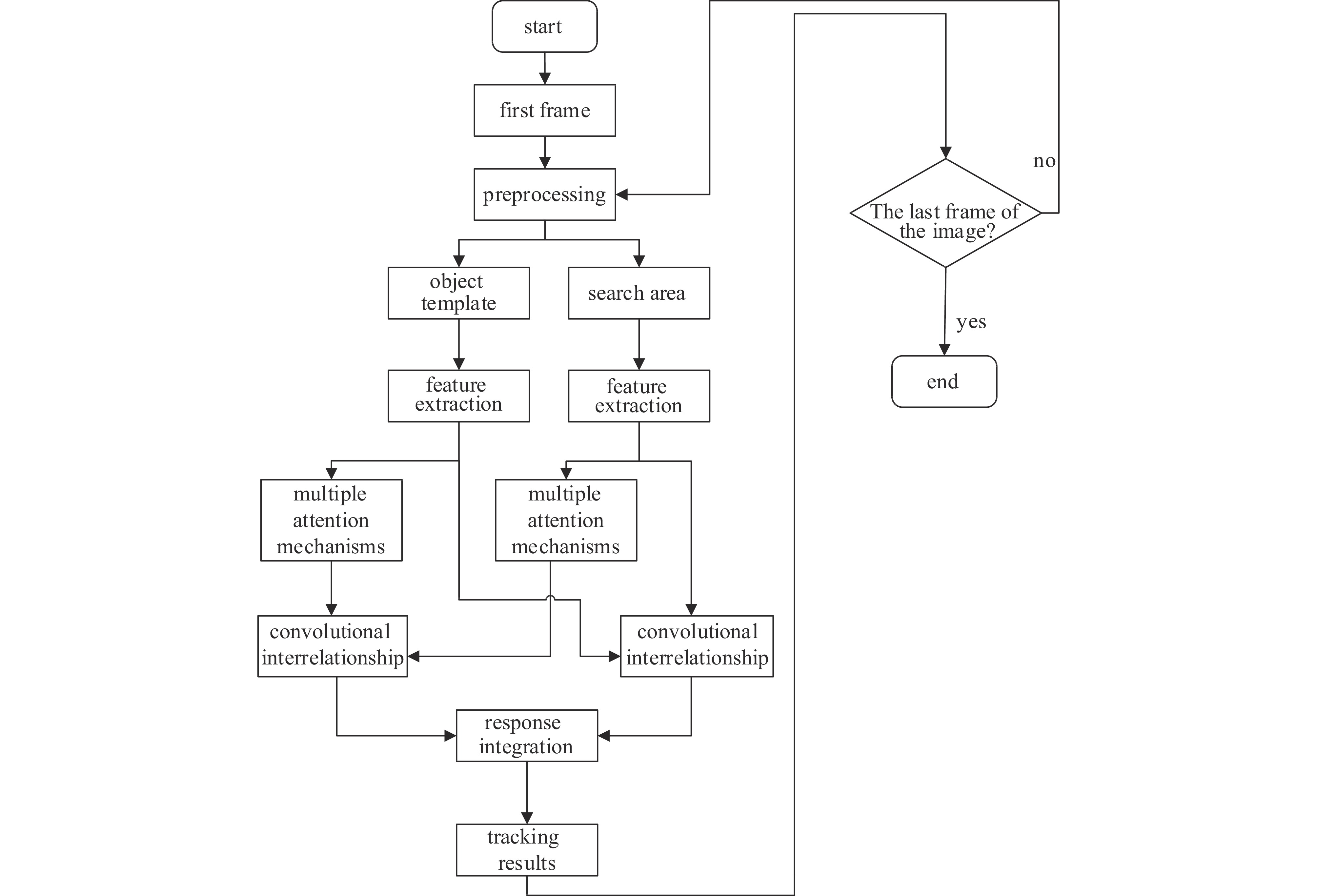

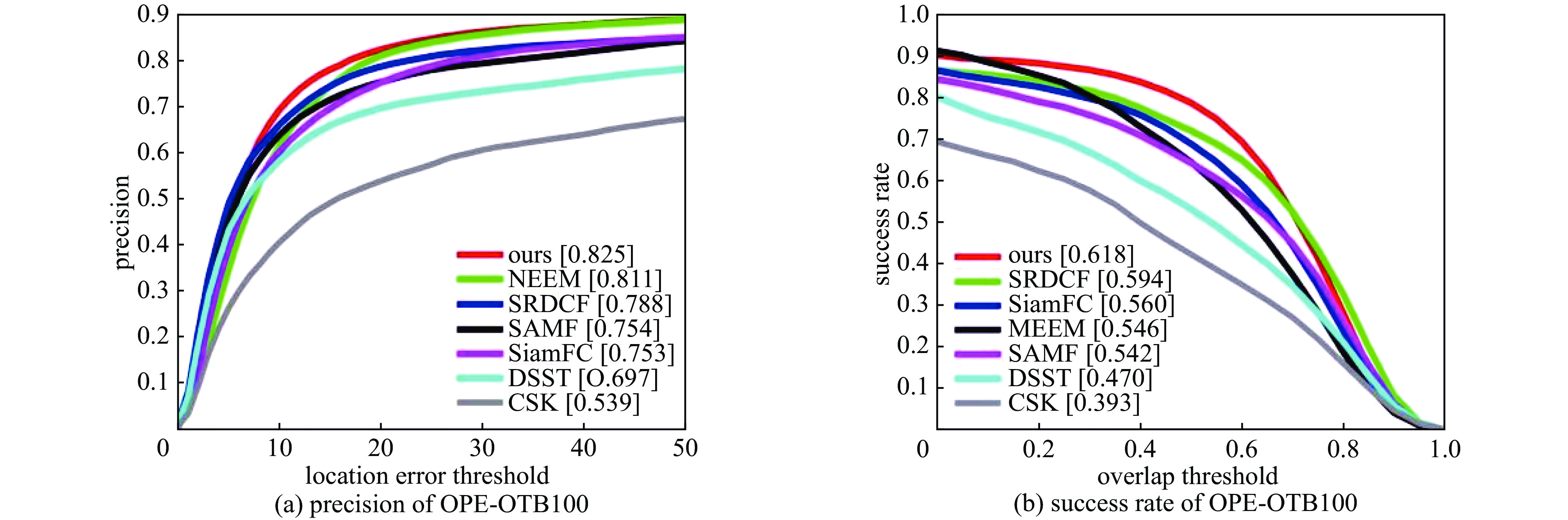

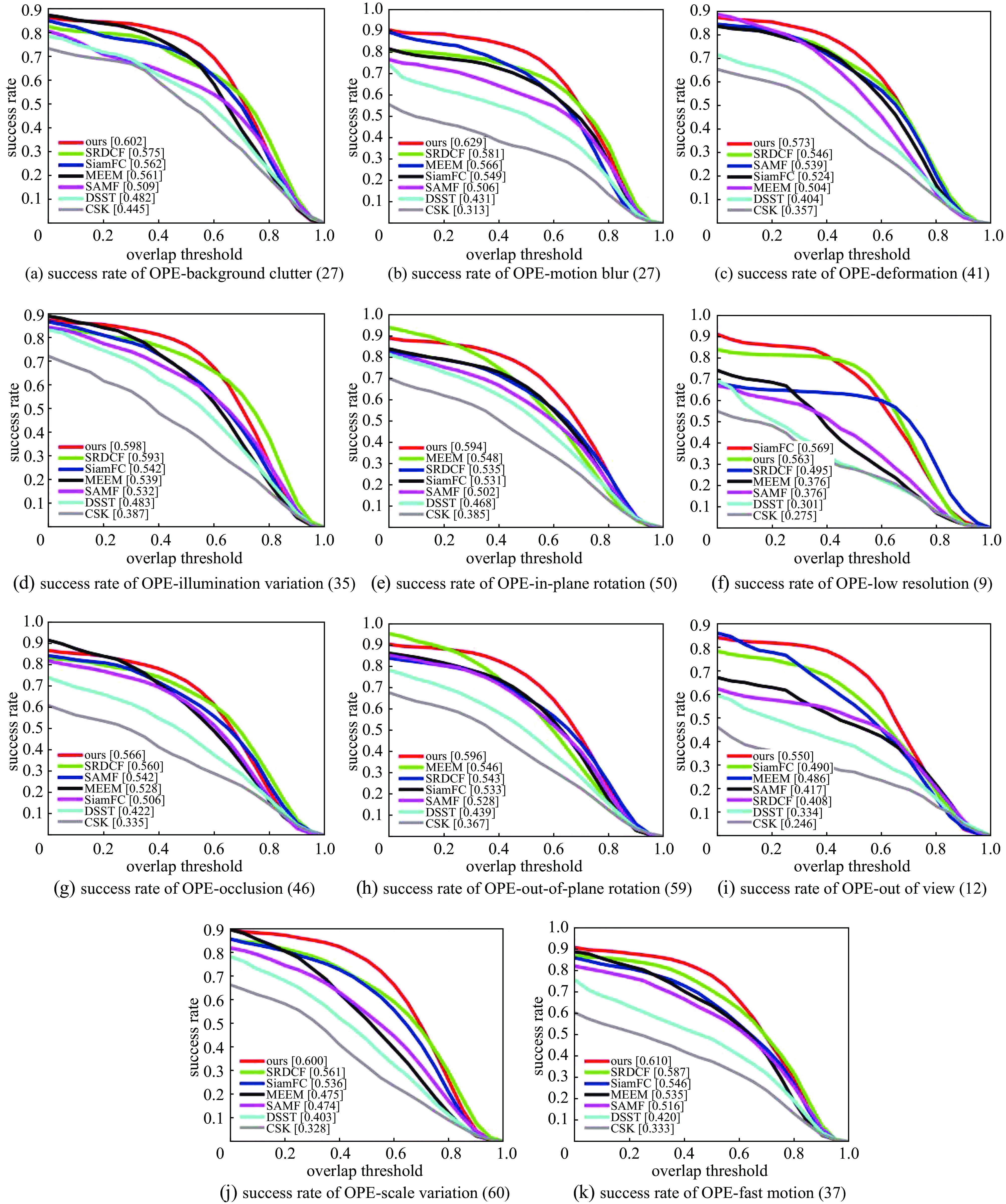

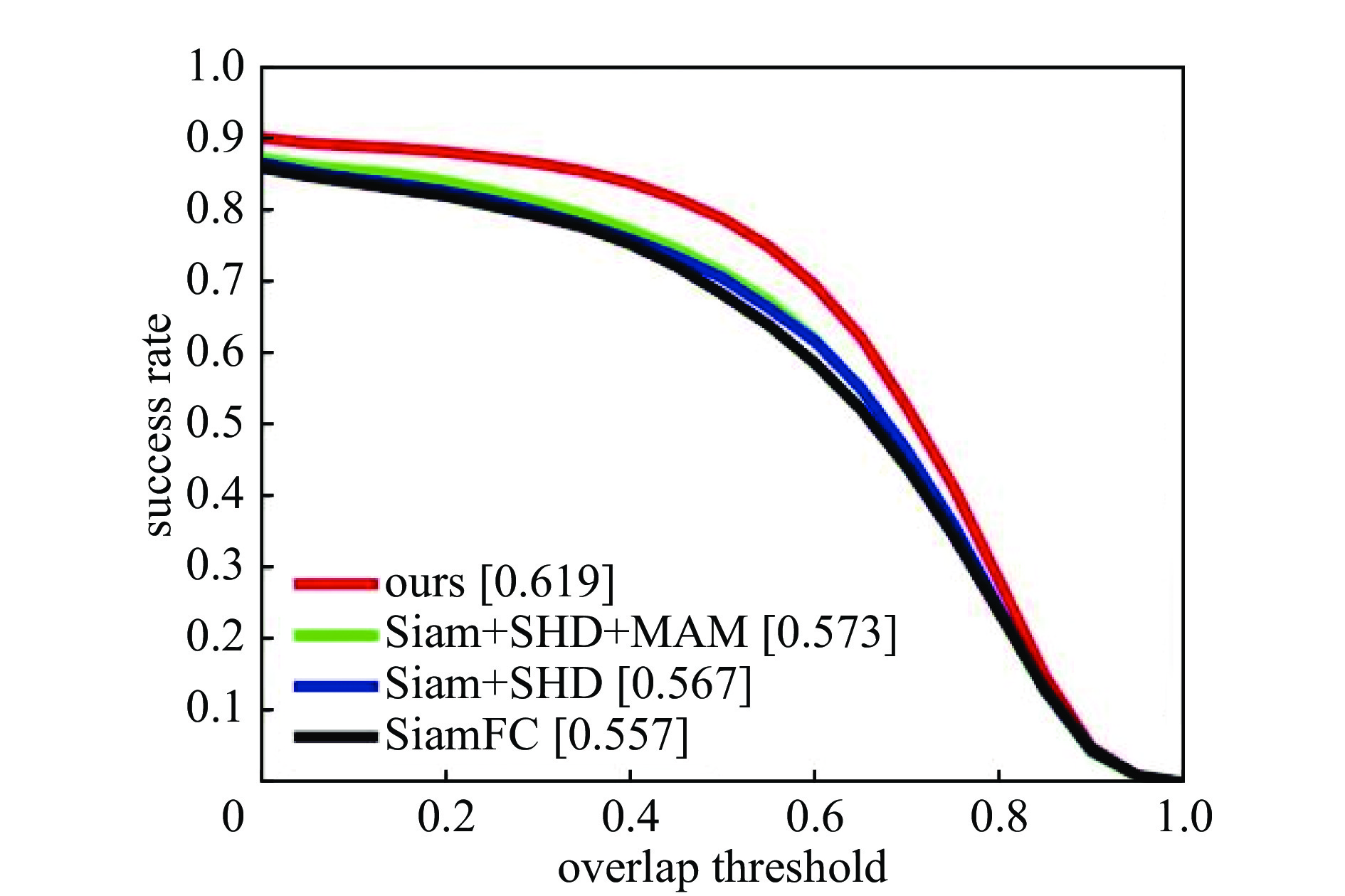

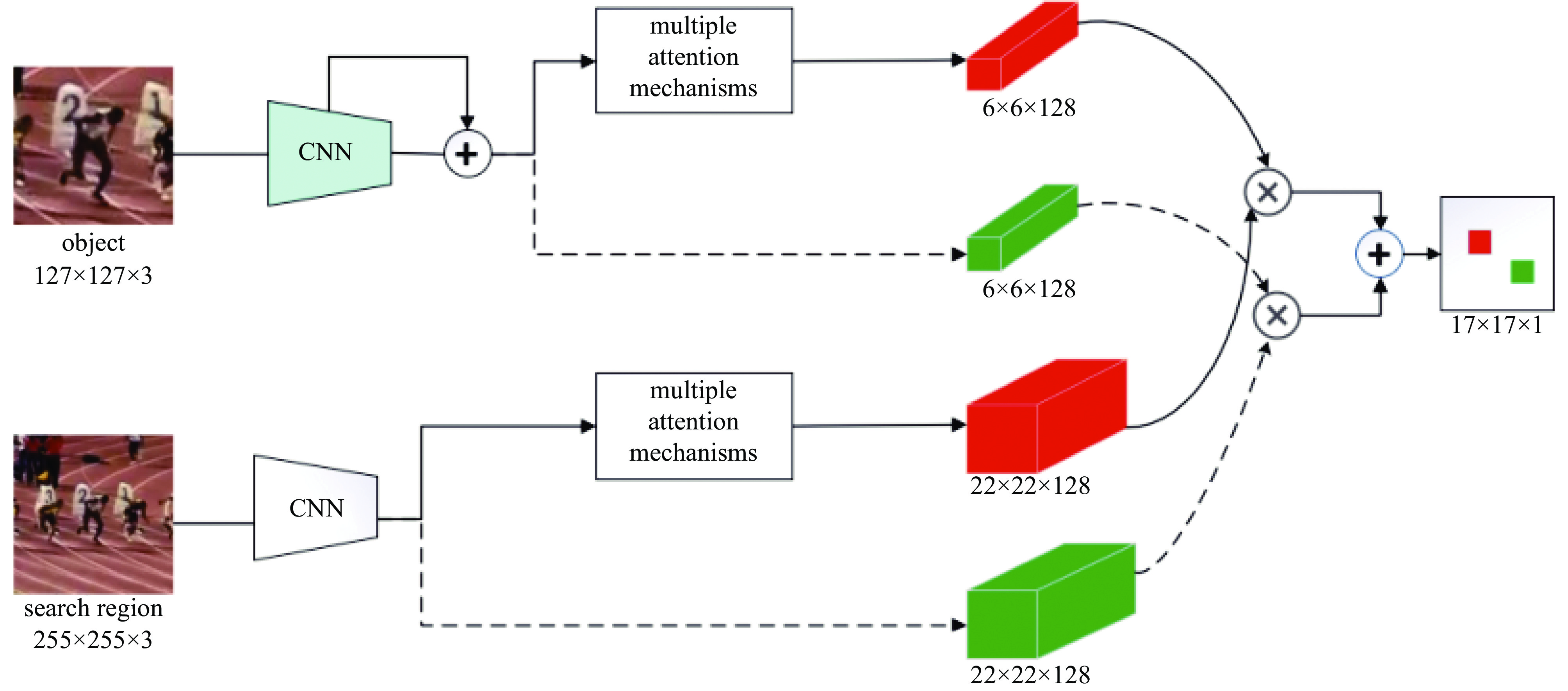

Abstract: Single-object trackers built on Siamese fully convolutional networks cannot extract high-level semantic features of the target, nor can they attend to and learn its channel, spatial, and coordinate features at the same time. As a result, tracking performance degrades, or tracking fails outright, in complex scenes involving target deformation, pose changes, and background interference. To address this problem, we propose a Siamese-network single-object tracking algorithm based on multiple attention mechanisms and response fusion. The algorithm comprises three modules designed to improve tracking performance: a deep backbone feature extraction network that combines small convolution kernels with skip-connection feature fusion, an improved attention mechanism, and a response fusion operation applied after convolutional cross-correlation. Ablation experiments verify the effectiveness of each module. On the OTB100 benchmark, the algorithm achieves a tracking precision of 0.825 and a success rate of 0.618. Comparison with other advanced algorithms shows that it copes effectively with the performance degradation that trackers suffer in complex scenes and further improves tracking precision while maintaining tracking speed.

Key words: Siamese networks; single-object tracking; attention mechanism; feature response; fusion
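The abstract names cross-correlation followed by response fusion but does not say which responses are fused or how. The sketch below is therefore only an illustration under stated assumptions: it computes SiamFC-style unpadded cross-correlation on two toy single-channel feature pairs, then fuses the two response maps with a weighted element-wise sum. The function names, the two "branches", and the weights are all hypothetical and are not taken from the paper.

```python
def cross_correlate(search, template):
    """Slide `template` over `search` without padding and return the response map."""
    th, tw = len(template), len(template[0])
    sh, sw = len(search), len(search[0])
    return [
        [
            sum(search[i + u][j + v] * template[u][v]
                for u in range(th) for v in range(tw))
            for j in range(sw - tw + 1)
        ]
        for i in range(sh - th + 1)
    ]


def fuse(responses, weights):
    """Weighted element-wise sum of equally sized response maps (assumed fusion rule)."""
    rows, cols = len(responses[0]), len(responses[0][0])
    return [
        [sum(w * r[i][j] for r, w in zip(responses, weights)) for j in range(cols)]
        for i in range(rows)
    ]


def argmax2d(response):
    """Return the (row, col) index of the largest response value."""
    cells = ((i, j) for i in range(len(response)) for j in range(len(response[0])))
    return max(cells, key=lambda p: response[p[0]][p[1]])


# Toy branch A: the template pattern sits at row 1, column 2 of the search map.
template_a = [[1, 2], [3, 4]]
search_a = [[0, 0, 0, 0], [0, 0, 1, 2], [0, 0, 3, 4], [0, 0, 0, 0]]

# Toy branch B: a second (hypothetical) feature pair with the target at the same place.
template_b = [[1, 0], [0, 1]]
search_b = [[0, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1], [0, 0, 0, 0]]

response_a = cross_correlate(search_a, template_a)
response_b = cross_correlate(search_b, template_b)
fused = fuse([response_a, response_b], weights=[0.5, 0.5])
peak = argmax2d(fused)
print("fused response peak at", peak)
```

The peak of the fused map marks the estimated target position in response-map coordinates. A real tracker would operate on multi-channel feature maps and map the peak back to image coordinates.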

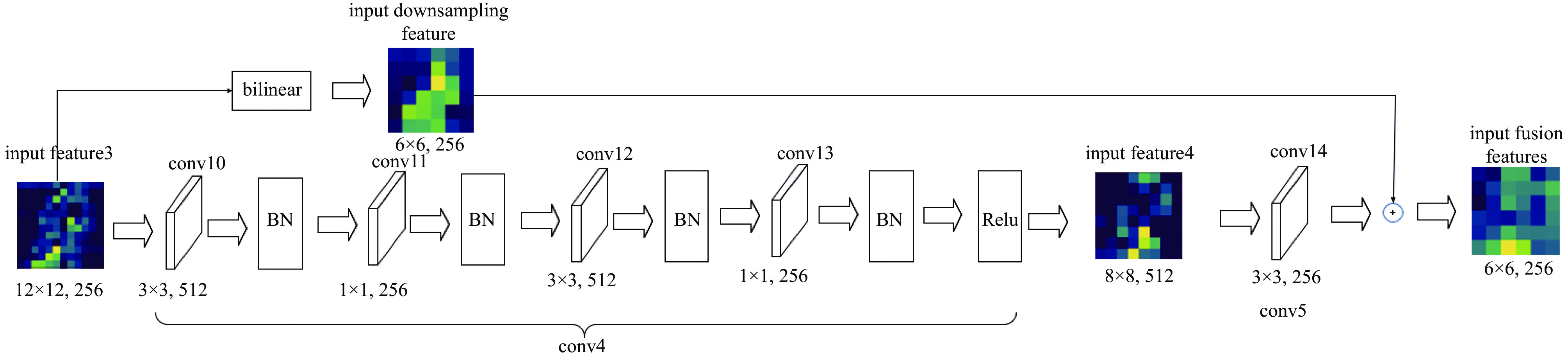

Table 1. Architecture of the new backbone feature extraction network

| Stage | Layer | Kernel | Stride / channels | Template size | Search region size |
| --- | --- | --- | --- | --- | --- |
| input | | | /3 | 127×127 | 255×255 |
| conv1 | conv1-BN | 3×3 | 1/64 | 125×125 | 253×253 |
| | conv2-BN | 3×3 | 1/128 | 123×123 | 251×251 |
| | conv3-BN-ReLU | 1×1 | 1/64 | 123×123 | 251×251 |
| | MaxPool | 2×2 | 2/64 | 61×61 | 125×125 |
| conv2 | conv4-BN | 3×3 | 1/128 | 59×59 | 123×123 |
| | conv5-BN | 1×1 | 1/64 | 59×59 | 123×123 |
| | conv6-BN-ReLU | 3×3 | 1/128 | 57×57 | 121×121 |
| | MaxPool | 2×2 | 2/128 | 28×28 | 60×60 |
| conv3 | conv7-BN | 3×3 | 1/256 | 26×26 | 58×58 |
| | conv8-BN | 1×1 | 1/128 | 26×26 | 58×58 |
| | conv9-BN-ReLU | 3×3 | 1/256 | 24×24 | 56×56 |
| | MaxPool | 2×2 | 2/256 | 12×12 | 28×28 |
| conv4 | conv10-BN | 3×3 | 1/512 | 10×10 | 26×26 |
| | conv11-BN | 1×1 | 1/256 | 10×10 | 26×26 |
| | conv12-BN | 3×3 | 1/512 | 8×8 | 24×24 |
| | conv13-BN-ReLU | 1×1 | 1/256 | 8×8 | 24×24 |
| conv5 | conv14 | 3×3 | 1/256 | 6×6 | 22×22 |
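The spatial sizes listed in Table 1 are consistent with unpadded convolutions and pooling, where each layer maps an input of size n to floor((n - k) / s) + 1 for kernel size k and stride s. The sketch below recomputes both size columns from the kernel and stride values in the table. The no-padding assumption is inferred from the numbers rather than stated in the source, and the helper names are illustrative.

```python
def out_size(size, kernel, stride=1):
    """Spatial size after an unpadded convolution or pooling layer."""
    return (size - kernel) // stride + 1


# (layer name, kernel size, stride) for every layer in Table 1, in order.
LAYERS = [
    ("conv1-BN", 3, 1), ("conv2-BN", 3, 1), ("conv3-BN-ReLU", 1, 1), ("MaxPool", 2, 2),
    ("conv4-BN", 3, 1), ("conv5-BN", 1, 1), ("conv6-BN-ReLU", 3, 1), ("MaxPool", 2, 2),
    ("conv7-BN", 3, 1), ("conv8-BN", 1, 1), ("conv9-BN-ReLU", 3, 1), ("MaxPool", 2, 2),
    ("conv10-BN", 3, 1), ("conv11-BN", 1, 1), ("conv12-BN", 3, 1),
    ("conv13-BN-ReLU", 1, 1), ("conv14", 3, 1),
]


def trace(input_size):
    """Return the input size followed by the output size of every layer."""
    sizes = [input_size]
    for _, kernel, stride in LAYERS:
        sizes.append(out_size(sizes[-1], kernel, stride))
    return sizes


template = trace(127)  # object template branch
search = trace(255)    # search region branch

for (name, _, _), t, s in zip(LAYERS, template[1:], search[1:]):
    print(f"{name:<15} {t:>3}x{t:<3} {s:>3}x{s:<3}")

# Assuming SiamFC-style unpadded cross-correlation of the two final feature maps,
# the response map side length would be search - template + 1.
response = search[-1] - template[-1] + 1
print("response map:", f"{response}x{response}")
```

Under these assumptions the final feature maps are 6×6 and 22×22, matching the last row of the table, and their cross-correlation would give a 17×17 response map.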

Table 2. Tracking speed comparison

| Algorithm | Average running speed (frame/s) |
| --- | --- |
| ours | 60 |
| SiamFC | 86 |
| MEEM | 6 |
| SRDCF | 4 |
| SAMF | 7 |
| DSST | 25 |
| CSK | 362 |

